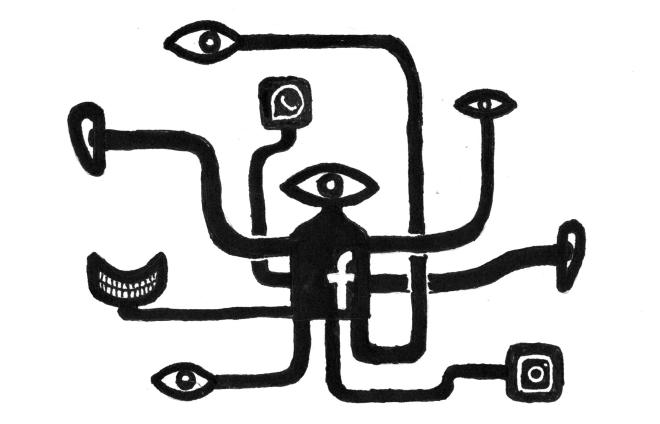

Social networks

I’ve written before about how the business model of Facebook drives the algorithm, and the algorithm drives our current political situation in which lies and conspiracy theories are resurgent.

It turns out that the situation is worse than that.

Researchers at Indiana University Bloomington have built simulations of social networks in which each node is an imaginary person with a random set of connections to other nodes. To model the real world, each simulated person has a limited attention span: they can only process a certain number of incoming messages. The messages are assumed to vary randomly in quality and, as in the real world, each person can choose whether or not to rebroadcast a message. In the simulation, the decision whether to rebroadcast is random rather than driven by “virality” or cognitive bias, so the simulation is an optimistic one.
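To make the setup concrete, here is a minimal Python sketch of this kind of limited-attention model. It is not the researchers' code. The network construction, the parameter names (`attention`, `p_new` and so on) and their defaults are all hypothetical. One assumption matters in particular: the rebroadcast decision is described as random, and this sketch reads that as a random draw from the feed weighted by each message's quality. Each feed holds at most `attention` messages, so when new posts arrive faster than they are read, older ones drop off unseen.

```python
import random
from collections import deque


def build_network(n_agents, n_links, rng):
    """Hypothetical random network: each agent links to n_links random others.

    Links are symmetric, so an agent's followers are also the agents it follows.
    """
    neighbours = {i: set() for i in range(n_agents)}
    for i in range(n_agents):
        others = [k for k in range(n_agents) if k != i]
        for j in rng.sample(others, n_links):
            neighbours[i].add(j)
            neighbours[j].add(i)
    return neighbours


def simulate(n_agents=200, n_links=5, attention=10, p_new=0.5, steps=20000, seed=0):
    """Run a toy limited-attention sharing model; returns (quality, shares)."""
    rng = random.Random(seed)
    neighbours = build_network(n_agents, n_links, rng)
    # Limited attention: a feed keeps only the newest `attention` messages;
    # appending to a full deque silently drops the oldest one.
    feeds = [deque(maxlen=attention) for _ in range(n_agents)]
    quality = []  # quality[m] is message m's quality, drawn uniformly from (0, 1]
    shares = []   # shares[m] counts the original post plus every reshare of m

    for _ in range(steps):
        agent = rng.randrange(n_agents)
        feed = feeds[agent]
        if not feed or rng.random() < p_new:
            # Post a brand-new message of random quality.
            msg = len(quality)
            quality.append(1.0 - rng.random())
            shares.append(0)
        else:
            # Assumption: the "random" rebroadcast is modelled as a draw from
            # the feed weighted by quality, not by popularity or virality.
            candidates = list(feed)
            msg = rng.choices(candidates, weights=[quality[m] for m in candidates])[0]
        shares[msg] += 1
        # Push the message into every neighbour's (bounded) feed.
        for other in neighbours[agent]:
            feeds[other].append(msg)

    return quality, shares


def shared_quality(quality, shares):
    """Average quality of everything posted or reshared, weighted by share count."""
    return sum(q * s for q, s in zip(quality, shares)) / sum(shares)


if __name__ == "__main__":
    for p_new in (0.1, 0.5, 0.9):
        quality, shares = simulate(p_new=p_new)
        print(f"p_new={p_new}: mean shared quality {shared_quality(quality, shares):.3f}")
```

Raising `p_new` floods the fixed-size feeds with fresh posts, so comparing `shared_quality` across values of `p_new` is one way to probe whether overload lowers the quality of what spreads. This toy version is not calibrated against the study and makes no claim about the size of the effect.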

In the simulation, message propagation follows a power law: the probability of a meme being shared a given number of times is roughly proportional to an inverse power of that number. Combined with each person's limited attention, this means that as the number of messages in the network rises, the quality of those that propagate falls.
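Written as a formula, with $P(n)$ the probability that a meme is shared $n$ times and $\alpha > 0$ an exponent (no value is given here, so the $\alpha = 2$ below is purely illustrative):

$$
P(n) \propto n^{-\alpha}
\quad\Longrightarrow\quad
\frac{P(2n)}{P(n)} = \frac{(2n)^{-\alpha}}{n^{-\alpha}} = 2^{-\alpha}.
$$

With $\alpha = 2$, for example, a meme is a quarter as likely to be shared 200 times as 100 times. Because that ratio does not depend on $n$, there is no typical level of sharing: a few memes reach enormous audiences while most go almost nowhere.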

Another way to look at it: as soon as your news feed becomes too big for you to read all of it before deciding whether to repost anything, the quality of the information you propagate is going to fall, because you are choosing from only the part you managed to read. Give everyone the same problem, let everyone in the world talk to everyone else, increase the overload by a factor of 10 to 100, and you have today’s information hellscape.